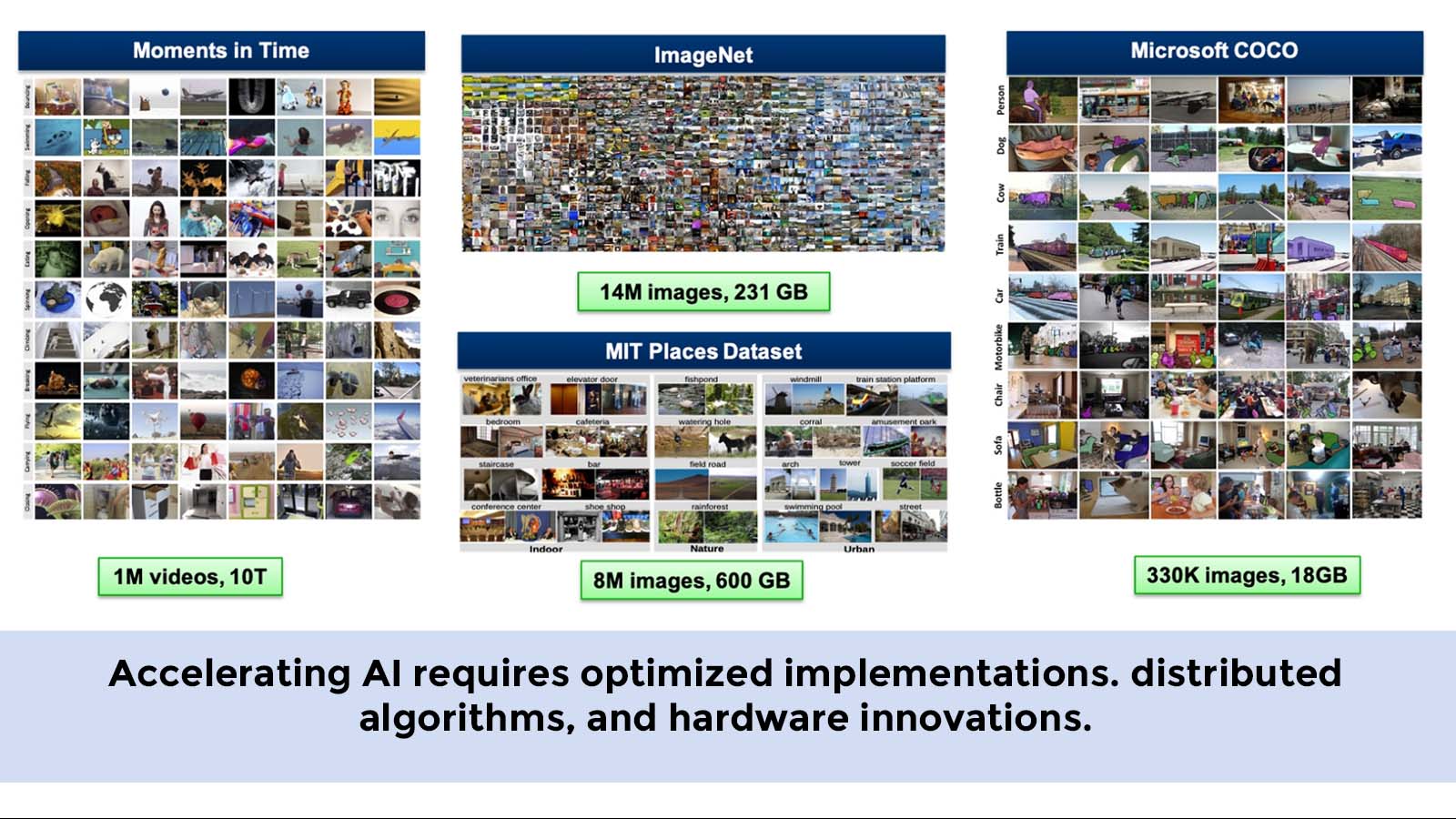

Today there is a large gap between the desire to develop and deploy artificial intelligence solutions and the ability to actually prototype them. Building robust deep learning systems for AI requires massive amounts of labelled data and large amounts of compute. The LLSC is working to address three challenges: managing and integrating vast, diverse data sets; optimizing the performance of enormous training and inference calculations; and developing algorithmic techniques for large-scale AI. This talk will present our results on optimizing the training of deep neural networks on a single processor and on scaling up to hundreds of processors to enable rapid prototyping of AI.